A student workshop is held in the 9th week of the REU program. Here are the previous workshops.

Summer 2018 Extension Workshop

Presented Projects

- "Classifying pseudoknots in RNA using graph theory" by Benjamin Hermus (College of Staten Island). Mentor: Dr. Louis Petingi.

- "Clustering graphy theory algorithms and applications to computational vision" by Egor Semeniak (College of Staten Island). Mentor: Dr. Louis Petingi.

- "Parallel implementation of DES encryption algorithm using MPI" by Leslie Inahuazo (Southern Connecticut State University). Mentor: Dr. Xiaowen Zhang.

Abstract and Presentation Video

"Classifying pseudoknots in RNA using graph theory" by Benjamin Hermus (College of Staten Island). Mentor: Dr. Louis Petingi.

In this project we intend to continue with the study of properties of RNA (Ribonucleic acid) secondary structures (2D) represented as dual graphs. A RNA secondary structure is composed of a primary sequence of consecutive base pairs (i.e., adenine, guanine, cytosine, and uracil), and secondary base pairs or stems connecting non-consecutive bases. Pseudoknots are complex RNA structures, which define biological properties of RNAs representing intertwining of two or more non-consecutive base pairs. In previous work a partitioning algorithm was implemented, which partitions the RNA into fragments (or subgraphs) to identify pseudoknotted or pseudoknot-free regions. This technique allows the systematic analysis and classification of RNAs. The mentor is presently working with Dr. Tamar Schlick, from the Department of Chemistry, and Courant Institute, of New York University, whose research team is pioneering the analysis of RNA structure and function. During the 2018 summer, the partition algorithm was extended to classify different pseudoknots (i.e., recursive vs. non-recursive pseudoknots).

"Clustering graphy theory algorithms and applications to computational vision" by Egor Semeniak (College of Staten Island). Mentor: Dr. Louis Petingi.

Many real-life problems deal with collections of high-dimensional data, such as images consisting of billions of pixels, text. To process these data one needs the computational time and memory requirements of algorithms. The main goals of segmentation are to split an image into segments having similar features or attributes. One of the possible solutions is to represent an image/data as the similar smaller size, edge-weighted graph, where the vertices represent individual pixels the edges neighborhood relations, and the weights on the edges reflect the similarity between pixel appearances. In this project similarity-based clustering algorithms are implemented using graph theoretical techniques such as random walks. The clustering and segmentation of images or videos are the fundamental and difficult problem in computer vision, big data, and pattern recognition.

"Parallel implementation of DES encryption algorithm using MPI" by Leslie Inahuazo (Southern Connecticut State University). Mentor: Dr. Xiaowen Zhang.

Some big data may contain a lot of sensitive and confidential information. In order to achieve data security and confidentiality we need to encrypt the data efficiently. For bulky big data encryption, typically we use block ciphers. For improve the encryption throughput, as a prototype implementation we convert the regular DES (data encryption standard) algorithm to its parallel version by using MPI (message passing interface) library on a high performance computing cluster. The mode of operation that can be easily parallelized is ECB (electronic codebook), which allows encryption of multiple blocks of data simultaneously. But ECB mode does not have diffusion property among blocks, does not hide patterns (an identical plaintext block always encrypts to the identical ciphertext block), is subject to simple block substitution, replay, and other attacks. In this project, we also plan to combine ECB with other secure modes in the parallel implementation to address these weaknesses.

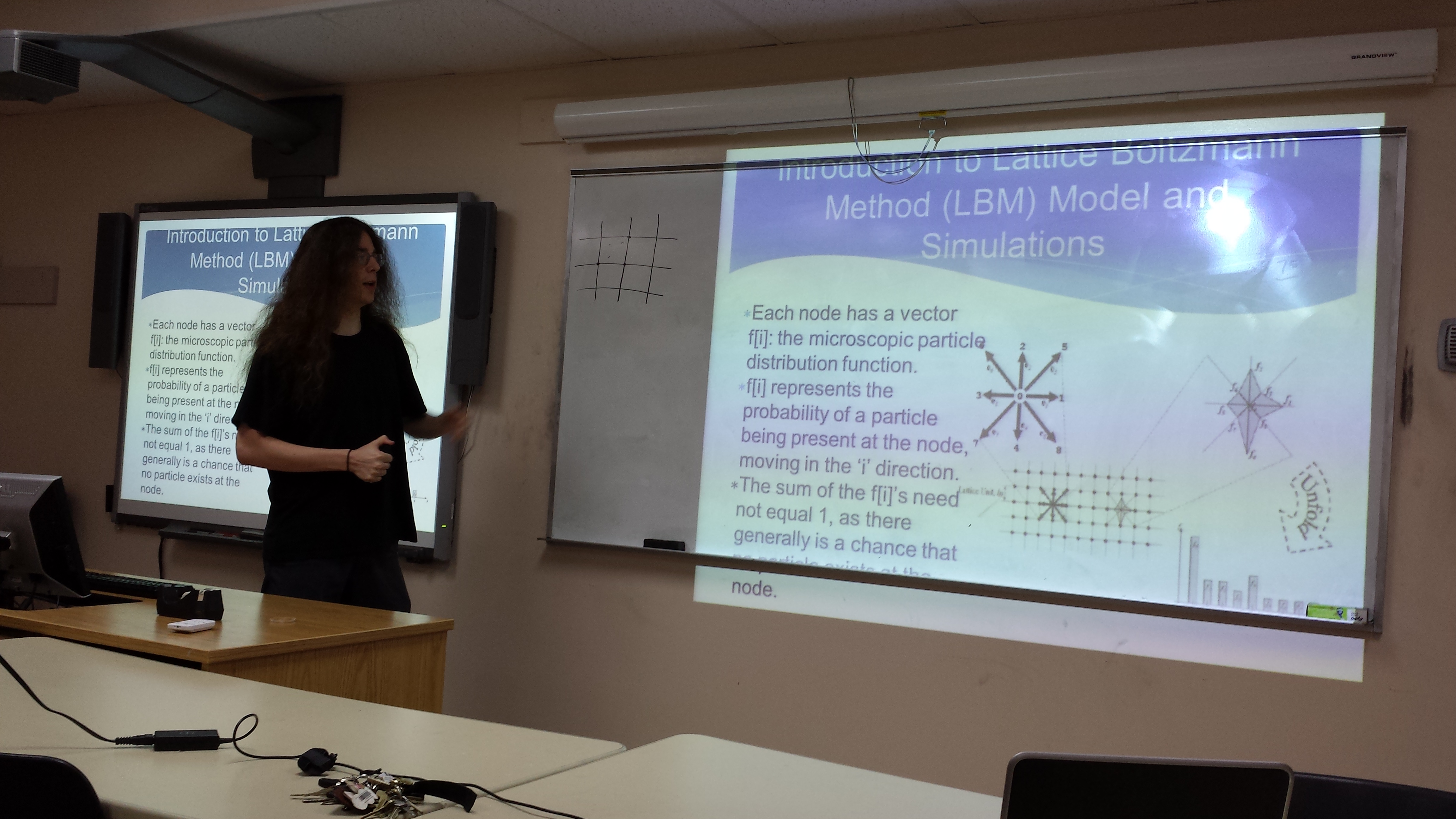

Presentation Photos

Summer 2017 Workshop

Presented Projects

- "Parallel hash collision search by rho method with distinguished points" by Brian Weber (Univ. of Maryland at Baltimore County) and Samantha Morris (Univ. of Central Florida). Mentor: Dr. Xiaowen Zhang.

- "Analysis of reliability-increasing topological transformations of communication networks to contemplate diameter constraints using parallelism" by Perla del Castillo (Univ. of Central Florida) and Robert Luke Zeller (North Carolina St. University). Mentor: Dr. Louis Petingi.

- "Automatic cell counting in microscopy images using convolutional neural network" by Claudia Bergeron (College of Staten Island-CUNY) and Richard Gandolfo (Hunter College-CUNY). Mentor: Dr. Shuqun Zhang.

- "Performance Analysis of Parallel Particle Filters" by Ali Mohamed (College of Staten Island-CUNY) and Linda Nguyen (San Jose State Univ.). Mentor: Dr. Feng Gu.

Abstract and Presentation Video

"Parallel hash collision search by rho method with distinguished points" by Brian Weber (Univ. of Maryland at Baltimore County) and Samantha Morris (Univ. of Central Florida). Mentor: Dr. Xiaowen Zhang.

In this project, we realized a memory efficient general parallel Pollard’s rho method for collision search on hash functions introduced by Oorschot and Wiener in 1997. This utilizes the principles of the birthday paradox to greatly increase the probability of a finding a collision, while using significantly less memory than the classic birthday attack, and allowing a larger portion of the subject hash function to be searched before running out of memory by saving only a few select digests called distinguished points. Using our implementation, we are able to find an average of 50 MD5 half collisions in the first hour of searching using a distributed memory high performance computing system called Penzias (one of CUNY HPC systems) on 32 processors. We then extend the technique with Cyrillic character replacement to search for meaningful MD5 half collisions. Next we analyze and measure how the performance of our implementation scales with different processor counts. Finally, we experiment with how the rarity of distinguished points affects the rate at which collisions are found at varying numbers of processors.

"Analysis of reliability-increasing topological transformations of communication networks to contemplate diameter constraints using parallelism" by Perla del Castillo (Univ. of Central Florida) and Robert Luke Zeller (North Carolina St. University). Mentor: Dr. Louis Petingi.

A communication network modeled as a undirected (directed) graph G = (V, E) where V is the set of vertices and E is the set of edges of G. Given a set of terminal vertices K of V (also called participating nodes), where each edge e of G is assigned a probability of operation re, the classical reliability measure, RK(G), introduced in the 1970s, is the probability that after deletion of the failed edges, each pair of terminal vertices u and v, is connected by an operational path. The Diameter-constrained reliability RK(G,d) (DCR), a generalization of the classical reliability, and introduced in 2004, gives the probability that given a diameter bound d, for each pair of terminal vertices u and v, at least an operational path of length d or less that connects u and v. This is applicable when assessing, for example, the reliability for any packet-oriented network where links may fail and a "time-to-live" (TTL) limit is specified in the number of hops that can be traversed by any given packet (for instance IPv6 packets include a hop limit field). As the classical reliability measure does not capture the distances between the network’s vertices, the DCR can be applied to assess the probability of establishing or maintaining a connection by setting, for example, the diameter bound d equal to the maximum number of hops to be visited by a packet or request. As the evaluation of the DCR is an NP-hard problem, students implement exact evaluation algorithms to calculate the DCR, such as Moskowitz's Factoring Theorem, and parallelize it using MPI libraries in C++, to improve the computational complexity. Then reliability-increasing graph transformations such as Kelmans' topological surgery are explored within the context of the DCR to modify a given network into a more reliable one; this transformation was originally introduced within the frame of the classical reliability but never studied when diameter constraints are under consideration. Theoretical results yielded during the 10 week program are also discussed.

"Automatic cell counting in microscopy images using convolutional neural network" by Claudia Bergeron (College of Staten Island-CUNY) and Richard Gandolfo (Hunter College-CUNY). Mentor: Dr. Shuqun Zhang.

Cell counting is an important process in the field of biological and pathological image analysis. It is still performed manually in many labs, and it is necessary to develop an automatic counting method to obtain accurate and consistent results. Most of the existing methods are based on object detection and segmentation, which cannot achieve satisfactory results due to low quality of images, noise, overlapping cells, and complex background and shapes in microscopy images. Modern machine learning methods especially deep learning have recently revolutionized the field of image processing and computer vision. This project explores the use of deep learning approach in cell counting to obtain more robust results. We evaluate the performance of deep convolutional neural networks on cell counting task, and compare the speed of GPU and CPU implementations.

"Performance Analysis of Parallel Particle Filters" by Ali Mohamed (College of Staten Island-CUNY) and Linda Nguyen (San Jose State Univ.). Mentor: Dr. Feng Gu.

Particle filters are widely used in data assimilation of simulation systems, which are sample-based methods using Bayesian inference and sampling techniques to recursively predict the state of the system with given observations. Practically, a large number of particles are needed to guarantee the convergence of particles, especially for large-scale dynamic systems. Therefore, parallel particle filters are needed to improve the performance by deploying particles on different processing units. In the three main steps of particle filters, sampling and weight computation have no data dependency between processing units. However, resampling needs the global processing, resulting in communication costs. To decrease the communication cost, various particle routing policies are proposed, such as minimal transfer particle routing and maximal balance particle routing policy. In this work, we systematically analyze the performance of parallel particle filters with various routing policies and show their effectiveness.

Presentation Photos

Summer 2016 Workshop

Presented Projects

- "Estimation of the Network Reliability for the Rare Case Phenomenon" by Jessica Kawana (Willamette Uinversity) and Steven Wojcik (CUNY - College of Staten Island). Mentor: Dr. Louis Petingi.

- "Finding hash collisions using MPI on HPC clusters" by Melisa Cantu (Texas State University - San Marcos) and Joon Kim (CUNY - College of Staten Island). Mentor: Dr. Xiaowen Zhang.

- "Acceleration of saliency detection and segmentation using GPU" by Genesis Rosado-Martinez (University of Puerto Rico at Arecibo) and Colin Hansen (Buena Vista University). Mentor: Dr. Shuqun Zhang.

- "Adaptive algorithm in improving the performance of distributed and parallel particle filter" by Lixin Huang (Syracuse University) and Evan Ferguson-Hull (Bates College). Mentor: Dr. Feng Gu.

Abstract and Presentation Video

"Estimation of the Network Reliability for the Rare Case Phenomenon" by Jessica Kawana (Willamette Uinversity) and Steven Wojcik (CUNY - College of Staten Island). Mentor: Dr. Louis Petingi.

The system under study is a probabilistic communication network represented by a undirected graph G = (V,E), where V and E are the set of nodes and edges of G, respectively, and where edges fail independently with known probabilities (nodes do not fail). Given a set T ⊆ V of terminal nodes, the classical reliability, RT(G), is the probability that each pair of terminal nodes is connected by a path, regardless of its length. The Diameter-constrained reliability (DCR), RT(G,d), introduced in 2001, imposes a bound d on the length of paths connecting each pair of terminal nodes, thus representing then a generalization of the classical reliability. As computation of the DCR is an intractable problem (i.e., NP-hard), Monte Carlo (MC) techniques have been successfully applied to estimate the reliability. A MC sampling technique called the Crude Monte Carlo (CMC), was implemented by our 2015 REU students and parallelized using MPI; however these techniques are not usually efficient for highly reliable or highly unreliable networks because of the so-called rare-event phenomenon (i.e., edges fail with probabilities approaching either 0 or 1). This year we implement a MC Variance Reduction technique (MCVR) in which pre-computing information about a topology is given, and therefore MC sampling can then be restricted to a smaller range, reducing as a result the variance of the reliability estimator.

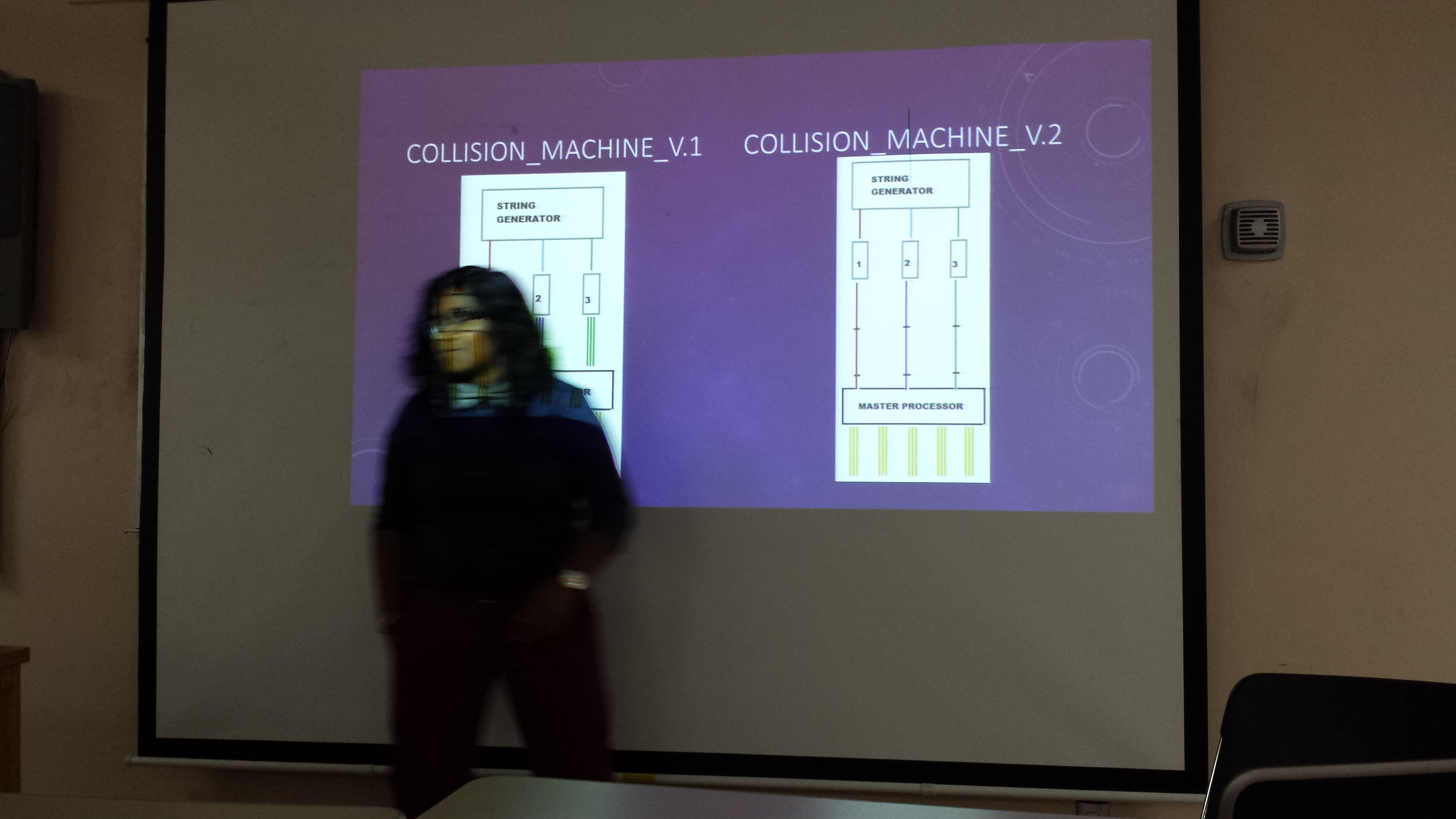

"Finding hash collisions using MPI on HPC clusters" by Melisa Cantu (Texas State University - San Marcos) and Joon Kim (CUNY - College of Staten Island). Mentor: Dr. Xiaowen Zhang.

In cryptography, a hash function is a very important cryptographic primitive with a wide range of applications. There are three required properties for a good hash function, i.e., collision resistance, pre-image resistance, and second pre-image resistance. In this research project, we try to contest these properties on a popular and widely used hash function called MD5, and its two simplified versions that we made. The birthday attack technique was used to test MD5's general collision resistance, while the brute force method was used in the search of pre-image and second pre-image collisions. We calculated the hamming distance to monitor the progress in our search of finding a collision, the smaller the hamming distance the better. Our input domain for the MD5 hash function consisted of hexadecimal bit-strings and strategically generated ASCII character strings. Since the finding of hash collisions demands much more computing power and storage, we wrote C parallel programs in conjunction with the Message Passing Interface (MPI) library that runs over multiple processors / cores in the heavily used CUNY HPC cluster called Penzias. Multiple search / sort / merge algorithms were tested, not only to reduce time and space complexities, but also to improve performance, hash distributions, and the number of arbitrary meaningless or meaningful collisions found.

"Acceleration of saliency detection and segmentation using GPU" by Genesis Rosado-Martinez (University of Puerto Rico at Arecibo) and Colin Hansen (Buena Vista University). Mentor: Dr. Shuqun Zhang.

Saliency detection and segmentation are important steps in many image processing and computer vision applications. They automatically identify and extract visually salient regions from images, which are needed by advanced image processing operations. However, most algorithms for saliency detection and segmentation are compute and data intensive, and hard to meet real-time processing requirement. This project seeks to utilize the parallel processing power of GPU using the CUDA framework and OpenCV library to greatly accelerate saliency detection and segmentation methods. The performance on various image sizes is compared between GPU-based and CPU-based implementations. The results show that real-time saliency detection can be achieved with the GPU acceleration, and the GPU-accelerated saliency segmentation can also provide speedup over the CPU implementation.

"Adaptive algorithm in improving the performance of distributed and parallel particle filter" by Lixin Huang (Syracuse University) and Evan Ferguson-Hull (Bates College). Mentor: Dr. Feng Gu.

Particle filters are essential techniques for modeling and simulation of large-scale, high-dimensional, complex systems. Parallel and distributed particle filters are introduced to improve the performance of the filtering process by distributing particles to multiple processing units. Sampling and resampling are the two major steps in this process. Sampling is easily parallelized because there are no dependencies across the processing units. Resampling, on the other hand, requires global information exchange in order to achieve high accuracy. After resampling, selected particles may need to be routed to other processors in order to maintain an even computational load across all of the processors. Particle routing requires large amounts of inter-processor communications. The communication between processors lowers the efficiency of parallel computing if particles contain too much information. Therefore, it is not necessary to exert the global resampling if the weights of particles didn't degenerate. In this study, a new algorithm named adaptive algorithm was introduced to decide whether the global resampling is needed or not according to an effective and efficient evaluation criteria. The experimental results showed the adaptive algorithm can shorten the computing period with similar accuracy compared to traditional algorithms in distributed particle filter. Our goal is to improve the performance of parallel and distributed particle filters while maintaining accuracy. We hope to achieve this by investigating different routing policies, and how they affect the performance, accuracy, and scalability of the simulation.

Presentation Photos

Summer 2015 Workshop

Presented Projects

- "Network Reliability" by Martin Lapinski (CSI Macaulay Honors College), Helen Lin (CSI Computer Science), and Myles McHugh (Kean University). Mentor: Dr. Louis Petingi.

- "Finding Partial Hash Collisions through Brute Force Parallel Programming" by Vincent Chiriaco (University of North Alabama), Aubrey Franzen (Northern Kentucky University), and Rebecca Thayil (Bryn Mawr College). Mentor: Dr. Xiaowen Zhang.

- "GPU Acceleration for Digital Holographic Image Reconstruction and Processing" by Danielle Lopez (Rowan University) and Ivan Mazo (Kean University). Mentor: Dr. Shuqun Zhang.

- "Using Lattice Boltzmann Method to Model and Simulate Ocean Oil Spill Propagation" by Martin Lapinski (CSI Macaulay Honors College). Mentor: Dr. Zhanyang Zhang

Presentation Videos

They are also on CSIToday - College of Staten Island. Special thanks to Ken Bach for editing the videos and making them available on YouTube.

"Finding Partial Hash Collisions through Brute Force Parallel Programming" by Vincent (Chuck) Chiriaco (University of North Alabama), Aubrey Franzen (Northern Kentucky University) and Rebecca Thayil (Bryn Mawr College). Project: Cryptography. Mentored by Dr. Xiaowen Zhang.

"Finding Partial Hash Collisions through Brute Force Parallel Programming" by Vincent (Chuck) Chiriaco (University of North Alabama), Aubrey Franzen (Northern Kentucky University) and Rebecca Thayil (Bryn Mawr College). Project: Cryptography. Mentored by Dr. Xiaowen Zhang.

A cryptographic hash function is used in data integrity check, digital signature, authentication protocol, etc. A hash function compresses an arbitrary length message into a shorter fixed length digest, or hash value (i.e., fingerprint of the message). If two or more messages are hashed to the same or similar digest, we call this a partial collision. If a collision can be easily found, then the data integrity can be violated and digital signature can be easily forged. By brute force method using parallel programming techniques, students systematically find partial hash collisions for a given target message, i.e., finding other messages that have the similar digest as the digest of the target message.

"GPU Acceleration for Digital Holographic Image Reconstruction and Processing" by Danielle Lopez (Rowan University) and Ivan Mazo (Kean University). Project: Image Processing. Mentored by Dr. Shuqun Zhang.

"GPU Acceleration for Digital Holographic Image Reconstruction and Processing" by Danielle Lopez (Rowan University) and Ivan Mazo (Kean University). Project: Image Processing. Mentored by Dr. Shuqun Zhang.

Students use phase shifting holography to record and reconstruct an object and implement image processing algorithms based upon GPU architectures.

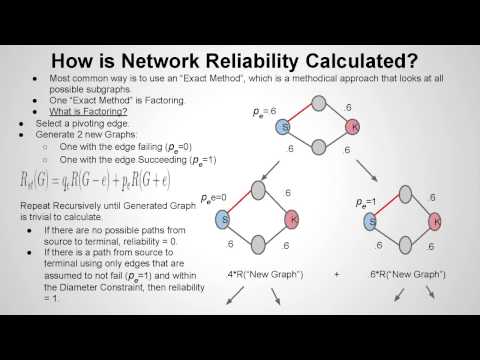

"Network Reliability" by Martin Lapinski (CSI Macaulay Honors College), Helen Lin (CSI, Computer Science), and Myles McHugh (Kean University). Project: Graph Theory. Mentored by Dr. Louis Petingi.

"Network Reliability" by Martin Lapinski (CSI Macaulay Honors College), Helen Lin (CSI, Computer Science), and Myles McHugh (Kean University). Project: Graph Theory. Mentored by Dr. Louis Petingi.

Students explain how Network Reliability Theory can be used to measure performance objectives of different communication networks (e.g., wireless networks) and how Monte Carlo techniques can be applied to efficiently estimate the reliability using parallel processing on a distributed environment.

Presentation Photos